So last week I had the great opportunity to test-drive the truly futuristic device that is Google Glass. Similar space-age accessories have appeared in countless science fiction films and novels, but let me tell you, Google Glass is very real, very functional, and may be coming very soon.

The Basics

Let’s get this out of the way. Right now, Google Glass is in “open beta”, meaning it’s basically being marketed to developers at the moment. Technically, anyone can purchase one, but at $1,500 I can’t say it’s worth it. Don’t be alarmed, however: the price will most likely be around $400-$500 once it officially launches for consumers.

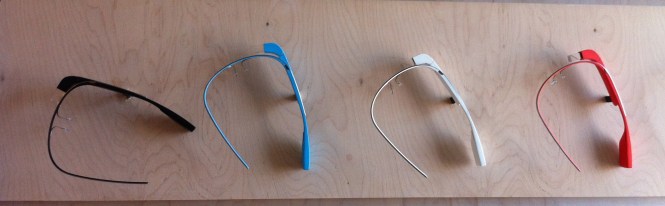

Glass is basically a small computer in the shape of eyeglass frames. It can be worn with or without lenses (the frames can be customized – they slip right on and off). It doesn’t have the full capabilities of your smartphone, however. It cannot call or text people unless it is tethered to your mobile device via Bluetooth.

So you probably still need to carry your phone around. More importantly, Glass only comes with Wi-Fi at the moment; it can’t receive a cellular signal (so no 3G or LTE). This means that Glass will use your smartphone’s data plan (via Bluetooth) when it needs to ping the web and there is no Wi-Fi network around. So Glass and your smartphone work together.

It Feels Magical

The first time you put Glass on, you will feel like a cyborg. One way to describe it is that it feels like a “natural” extension of your body. You don’t have to fumble around in your purse or pocket to access it – as you would your phone – but instead, all you have to do is look slightly up and to the right. To navigate, you can use the touchpad on the right side of the frame. Currently, there are three gestures: swiping down, swiping forward, and tapping, which let you close, scroll, and select what’s on the screen, respectively.

The screen is small enough that you actually don’t notice it at all if you’re looking straight ahead. It almost entirely disappears from your sight, unless you look slightly up. Although it definitely takes some getting used to, it does not feel intrusive at all.

On the main screen, you can say “Okay Glass” to bring up a list of commands, which you can then speak into the built-in microphone. Commands include taking a picture, recording a video, getting directions, and performing a Google search. I had limited time with the device, but I could already see the potential in the near-instant speed at which I could do any of these things.

I am highly interested in the photo and video capabilities. Currently, I have to physically whip my phone out of my pocket, start the camera app and tap a button to take a picture. Also, while I’m recording a video, the phone’s screen is basically covering my view. Having Glass mounted on my face not only frees up my hands, but also frees up my line of sight. It doesn’t seem like a big deal, but it’s actually phenomenal when you first experience it. For as long as cameras have existed, people have captured moments by holding up a rather large device to their face and snapping a photo. With Glass, it becomes second nature. You simply say the command.

The idea of getting instant directions is also helpful. I was just in Washington, D.C. this past weekend and I had to look up walking directions to museums and monuments on my smartphone. With Glass, instead of looking down at my phone, I could’ve been looking up at the sidewalk full of people and the street signs that could help direct me, all while getting directions spoken into my ear.

Put My Money Where My Mouth Is

So it seems that all I have for Glass is praise. Why didn’t I walk out with a fresh pair when I visited Google’s Chelsea Market location last week? Mostly because of the price. I can’t stomach spending $1,500 on a piece of technology that I want but don’t need. That’s the rub. No one actually needs this thing. It’s purely for convenience, akin to a Bluetooth headset. That said, there’s huge potential for Glass to be used in a professional setting. Think of people who need instant information, like surgeons, soldiers and firefighters. They could really use such a tool, but for the layperson, it’s just a convenience.

Will I get one at $400? You betcha, but that’s just because I’m a technophile.